We’ve all seen those evaluation forms at restaurants, after a customer support call, or following a training session at work. And let’s be honest, our first reaction isn’t always excitement. Often, they feel like just another box to check.

But what if we looked at them differently? Those forms are actually one of the most direct tools to drive improvement. If you’ve ever thought, “Why hasn’t anyone fixed this?”, an evaluation can help. It’s a safe, structured way to share suggestions and concerns without the awkwardness of a direct confrontation. It’s how you tell the decision-makers what needs to change.

So, to guide you, we’ll break down everything you need to know about the evaluation process. We’ll cover the basics, key sections, different types, and tips. We’ll even help you create effective digital forms with a form builder.

What is an evaluation form for, and how important is it?

An evaluation form is a simple tool that turns opinions into actionable data, whether as numbered ratings or descriptive ones. It’s a structured way to gather feedback on people, programs, or services. Almost every industry that depends on clarity and compliance needs these assessment forms. For instance:

- They’re often mandatory for accountability in healthcare.

- They feed directly into performance metrics and audits in business.

Having this documented record protects organizations and makes the entire process more transparent.

A good form does more than just collect data; it shows you value honest feedback and gives people a clear, neutral way to share it. This builds trust and improves communication.

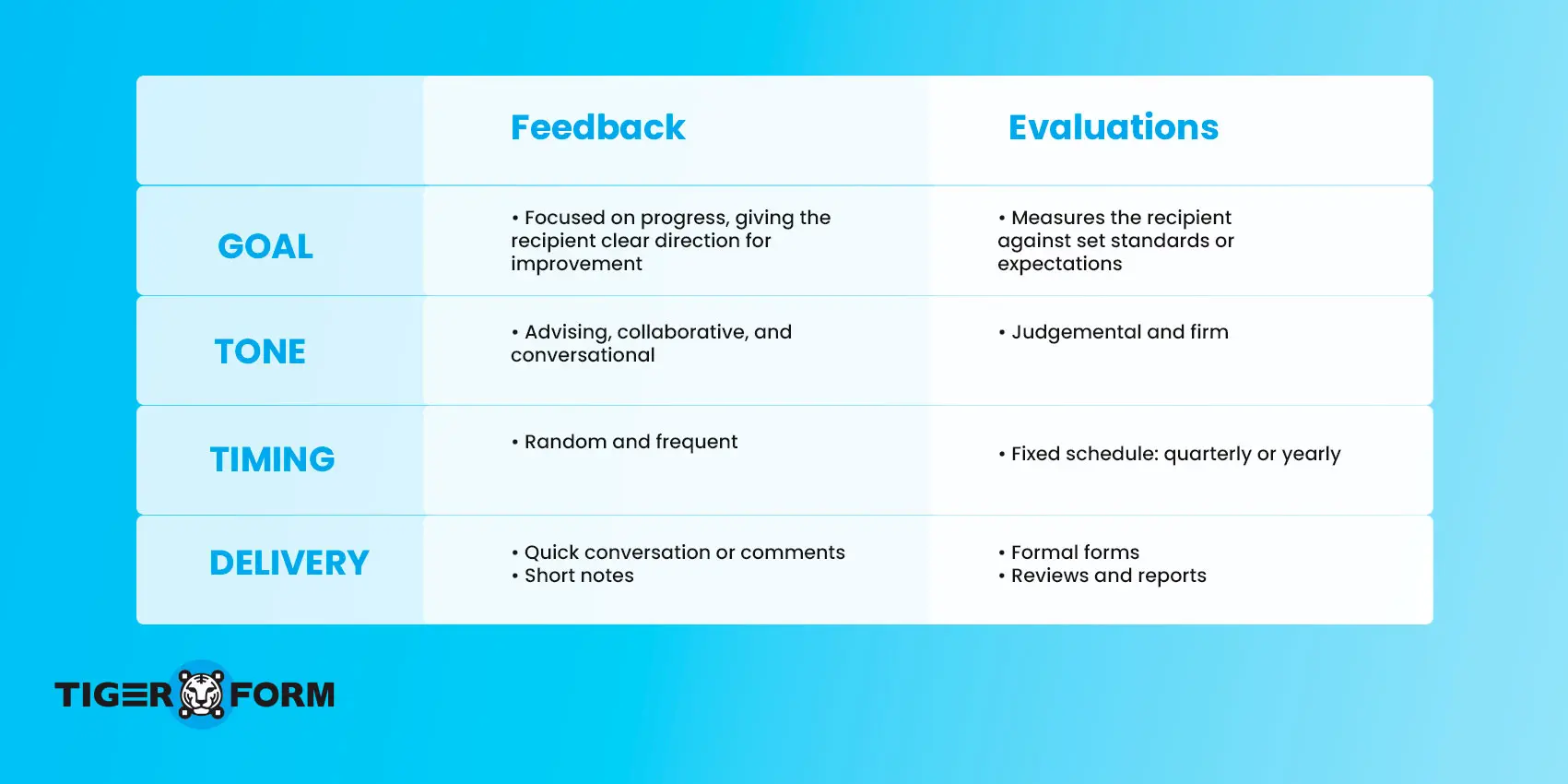

And unlike casual feedback, evaluations provide a standardized way to spot trends, compare responses fairly, and track progress over time. Let’s see below how general feedback and structured evaluations differ.

Evaluations vs. feedback

Evaluations and feedback are frequently confused since both aim to improve outcomes for a person, project, or program. They differ in structure, though: feedback is casual and open-ended, while an evaluation follows a standardized format that makes responses easy to compare and track over time.

What are the parts of an assessment form?

A strong evaluation follows a structure to guarantee that it gathers precise answers. Standard fields help evaluators to interpret results without confusion. Thoughtfully assembled, the form gathers both measurable data and personal insight. Most evaluations, regardless of industry, include the following parts:

1. Basic information

This field often includes the respondent’s name, role, and date. Sometimes, it may also include contact details. Respondents usually prefer anonymity, so these identifiers can be made optional. However, anonymous surveys or forms are not an option in formal evaluations where accountability is required.

2. Introductions and instructions

A greeting and short explanation of how to complete the form set the tone. Opening headers will help respondents feel more comfortable and guided on what to do with the form. For example, “Hi! This is the monthly employee evaluation. Rate each area of performance from 5 (highest) to 1 (lowest).”

3. Rating questions and choices

Ratings turn opinions into measurable data. Evaluation or review forms often center on numerical scales, such as 1 to 10, or descriptive ones, such as “Poor, Fair, Good, Excellent.” Some use more structured types, such as Likert scales or semantic differential scales, to capture more nuanced patterns.

4. Open-ended questions

While scales are for comparison, open-ended questions provide deeper insights by explaining why someone rated something low or high. Qualitative responses uncover the “whys” of quantitative ones; numbers can be misread without them.

A good assessment form combines both, balancing measurable data with deeper insights from personal opinions. But use open-ended questions sparingly, as too many can lower the response rate through survey fatigue.

5. Comment section

This field is a space for additional notes, where participants can add thoughts that fall outside the form’s structure. A comment section works like an open-ended question without the question; it lets respondents explain their ratings.

6. Approvals or signatures

In more formal contexts, like HR, signatures from both the evaluator and the recipient make the form official and confirm the results.

Evaluation criteria and areas to be measured

Performance and productivity

At its core, a performance evaluation looks at how well the work is done, while a productivity evaluation measures whether the work is finished with a proper use of time and resources. For instance, someone might meet every deadline only by working overtime, which looks like strong performance but signals a productivity problem. So, performance and productivity must be measured together to catch cases like this.

Knowledge and skills

Knowledge and skills represent the toolkit someone brings to their role. It’s not enough to just know theories or processes; evaluations here check if someone can apply what they learn in real-world situations. This area matters because skills and knowledge are often the foundation of long-term growth. In short, knowledge and skills evaluations prevent short-term results from masking long-term gaps.

Behavior and attitude

Behavior and attitude go beyond technical output to show how someone fits into their environment. This includes professionalism, reliability, adaptability, and respect toward others. These qualities matter because they directly shape team dynamics. A person’s attitude always affects a team’s performance, for better or worse. By assessing this criterion, management can see who supports the working environment and who does less.

Communication and teamwork

Without communication, teamwork does not come together. That’s why it’s essential to assess both. Left unassessed, poor communication often leads to missed deadlines, confusion, and team burnout. Moreover, evaluating this area reveals natural leaders and helps reduce recurring conflicts.

Satisfaction and experience

This set of evaluation criteria flips the perspective outward. Instead of measuring the person being evaluated, it measures how people experience a product, service, or event. Questions on this evaluation focus on whether expectations were met, how enjoyable or helpful the experience was, and what could be improved.

Outcomes and impact

Outcomes and impact focus on the “so what” of any effort. Did the feedback actually improve skills? This area matters because it shifts attention from activity to results. Many organizations mistakenly measure success by activity levels rather than by the actual impact of their work. However, long-term value cannot always be quantified immediately, as it often requires a follow-up or observation period to assess.

Types of rating scales to be used in evaluations

Numerical rating

A numerical rating represents different performance levels using numbers (e.g., 1-5, 1-10). It is straightforward to use, making it popular for its simplicity and the ease with which data can be analyzed and compared.

Example:

Please rate the employee’s communication skills on a scale of 1 to 5, where 1 = Needs Significant Improvement and 5 = Outstanding.

Descriptive rating

Instead of numbers, a descriptive rating defines each performance level using detailed phrases. This method reduces ambiguity and provides raters with specific behavioral examples, leading to more consistent and objective evaluations. It moves beyond a simple number to a detailed assessment.

Example:

- Exceeds Expectations: Completes excellent work ahead of time.

- Meets Expectations: Consistently delivers solid work on time.

- Needs Improvement: Struggles to meet deadlines.

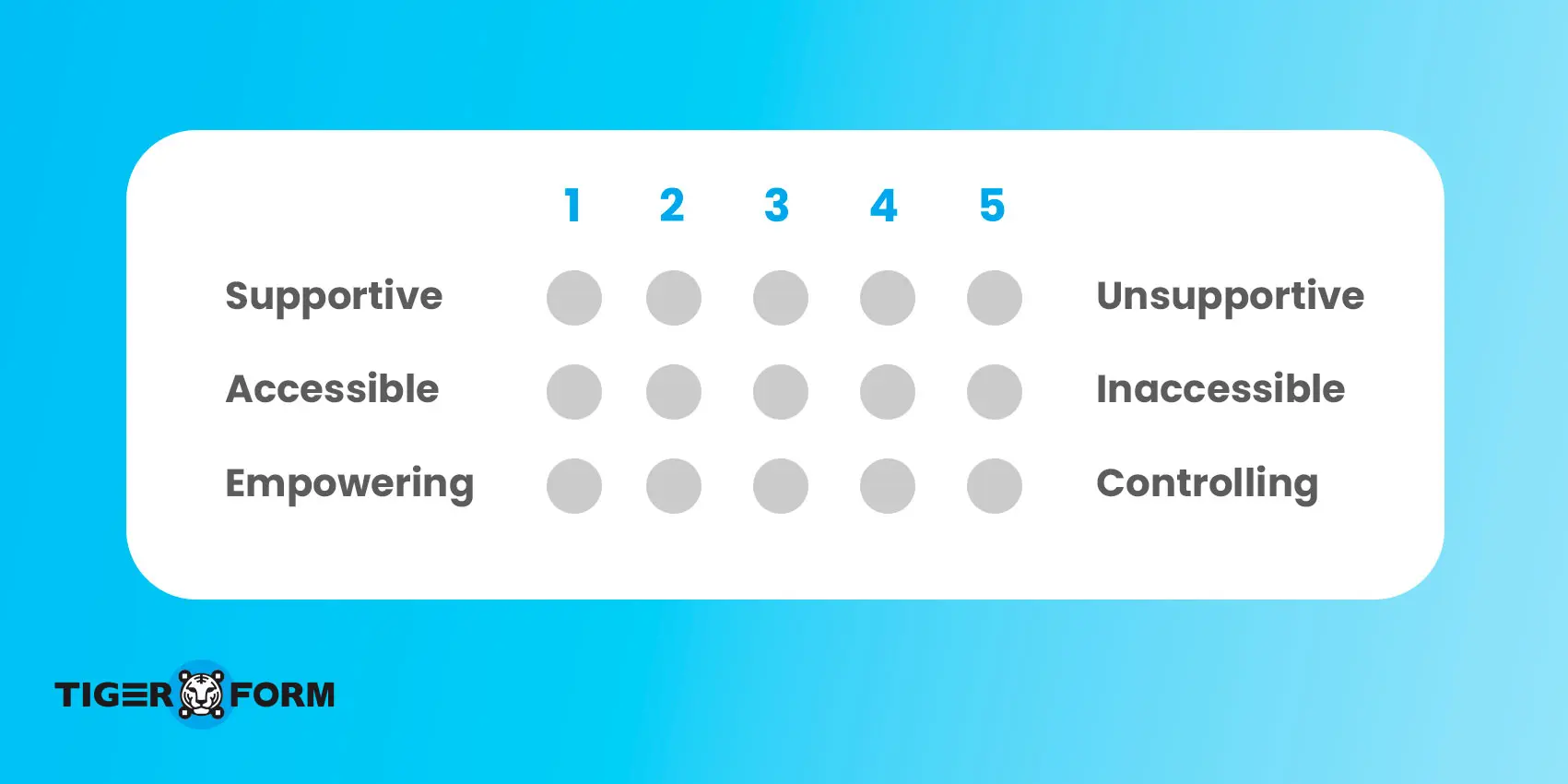

Semantic differential scale

This scale measures attitudes or perceptions by asking a person to rate a concept on a series of bipolar, or opposite, adjectives. It often uses points between the two extremes. This tool is powerful for getting nuanced feedback on a person’s behavior or a manager’s leadership style.

Example:

Rate your manager’s leadership style: Unsupportive ☐ ☐ ☐ ☐ ☐ Supportive

Likert scale

A Likert scale measures an individual’s level of agreement or disagreement with a statement. It is typically a five- or seven-point scale with options ranging from one extreme to another, often with a neutral midpoint. This scale is excellent for measuring attitudes, opinions, and soft skills.

Example:

This employee is a reliable and supportive team member.

☐ Strongly disagree ☐ Disagree ☐ Neither agree nor disagree ☐ Agree ☐ Strongly agree
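To analyze Likert answers, the labels are usually converted into numbers first. The snippet below is a minimal sketch of that step, assuming the five labels from the example above are scored 1 to 5; the `LIKERT_SCORES` mapping, the `score_likert` function, and the sample responses are all hypothetical and not part of any form builder.

```python
from statistics import mean

# Assumed mapping: the five labels from the example, scored 1 (lowest) to 5 (highest).
LIKERT_SCORES = {
    "Strongly disagree": 1,
    "Disagree": 2,
    "Neither agree nor disagree": 3,
    "Agree": 4,
    "Strongly agree": 5,
}


def score_likert(responses):
    """Convert Likert labels to numbers and return their average."""
    scores = [LIKERT_SCORES[label] for label in responses]
    return mean(scores)


# Hypothetical answers to "This employee is a reliable and supportive team member."
sample = ["Agree", "Strongly agree", "Neither agree nor disagree", "Agree"]
print(f"Average agreement: {score_likert(sample):.2f} out of 5")
```

An average is only one way to summarize these answers; counting how many people chose each label can be just as informative when the group is small.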

Graphic rating

A graphic rating presents a scale visually. It shows a trait or behavior next to a line or scale where the rater marks their answer. The points on the line are often labeled with numbers, descriptions, or both. This allows for both a quick visual assessment and a precise rating.

How to make an evaluation form in minutes

Making an assessment form doesn’t have to be difficult. With digital form creators, you can set one up in minutes without any coding skills. The starter guide below will walk you through creating an online form that can be shared easily with QR codes.

Step 1

Start by defining the purpose. Is the form meant to measure satisfaction, performance, or learning outcomes? Having clarity prevents you from overloading the form with unnecessary questions.

Step 2

Next, sign up for TIGER FORM creator, a specialized QR code form platform that allows you to build and share forms quickly.

Step 3

After signing up, add easier questions and choice fields before the detailed items. Place open-ended questions at the end, so participants can elaborate after rating.

Step 4

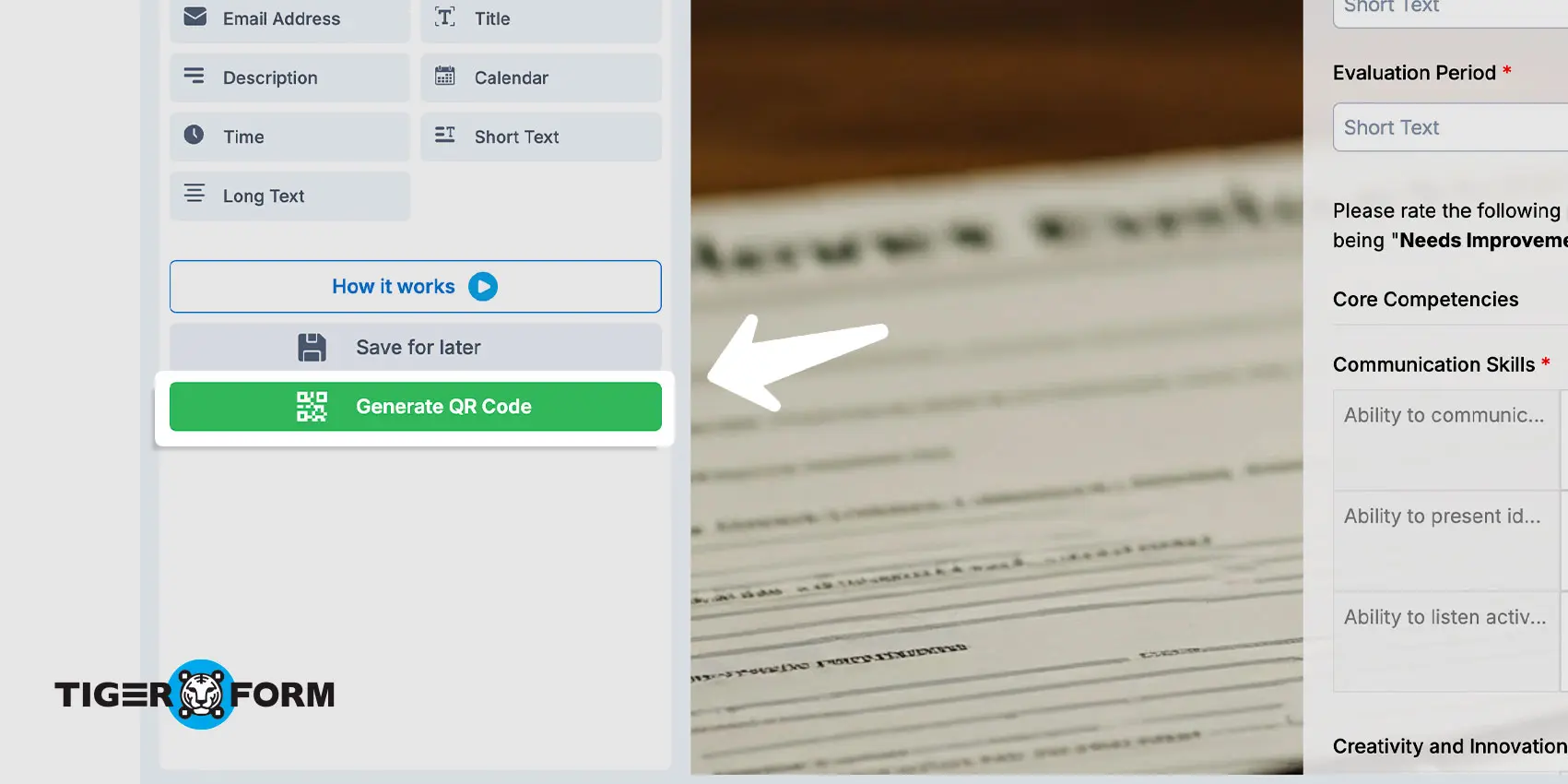

After adding all the fields, preview the form to finalize details.

Step 5

Next, generate your QR code by clicking the “Generate QR code” button. A pop-up will appear where you can customize the QR code, from patterns to frames.

Step 6

Afterward, you can download and digitally share the QR code or print it for on-location campaigns.

Step 7

Track responses and scans in the builder’s dashboard. You can see how often your form was scanned or submitted, along with details like location, device, and country.

Types of evaluations by applications + form templates

Assessment forms are not one-size-fits-all. Different industries and settings need different templates depending on their goals. Below are some of the primary assessment forms commonly used, with insights on where they fit best and templates you can use as starting points.

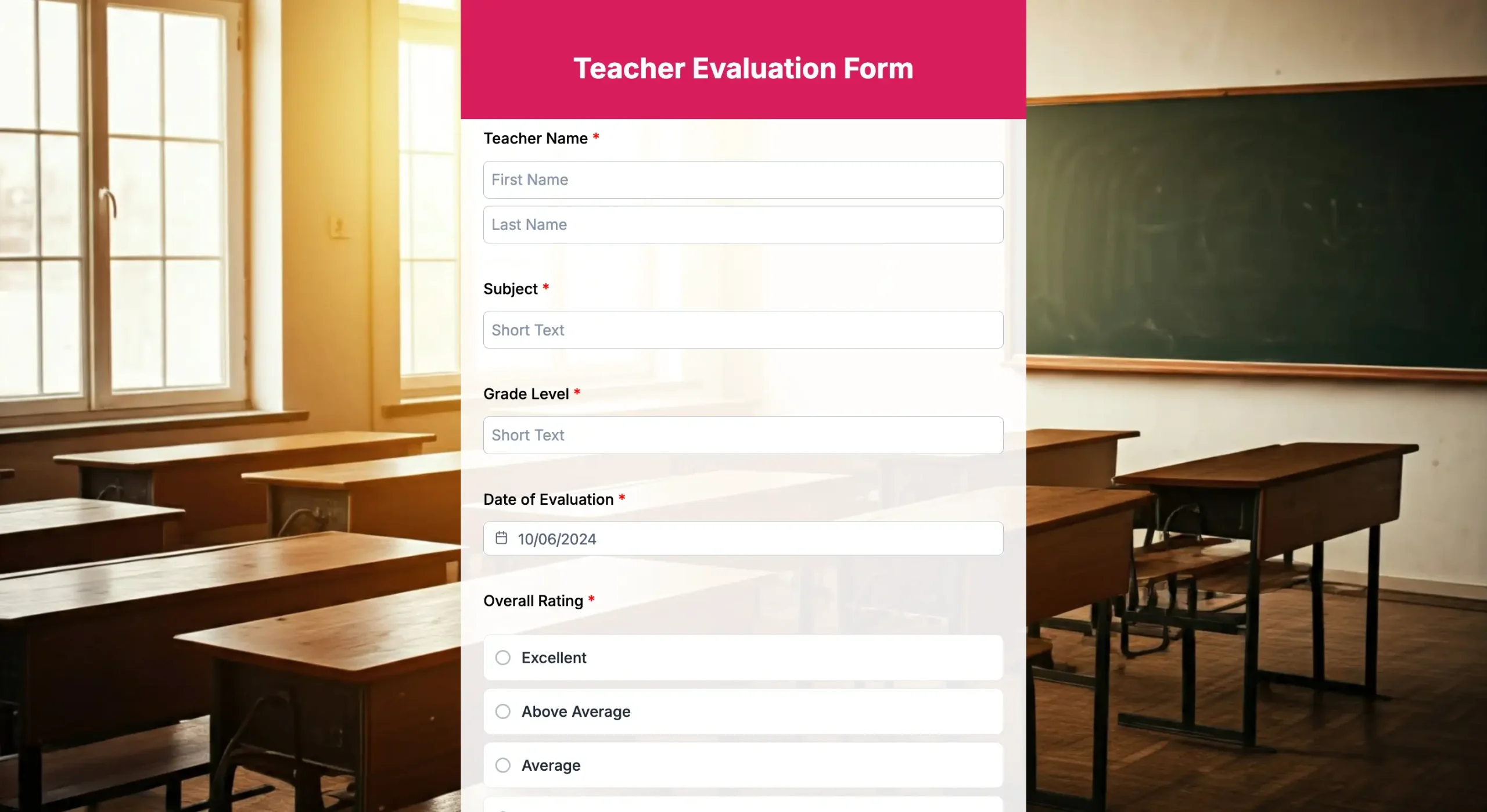

Employee evaluations

These are among the most widely used evaluation tools. These assessments usually cover productivity, teamwork, communication, and goal achievement. This type is not only for yearly reviews but also for probation checks, promotions, and performance tracking.

A well-designed employee evaluation form also supports fairness and transparency in the workplace, giving employees a clear picture of where they stand.

Related template:

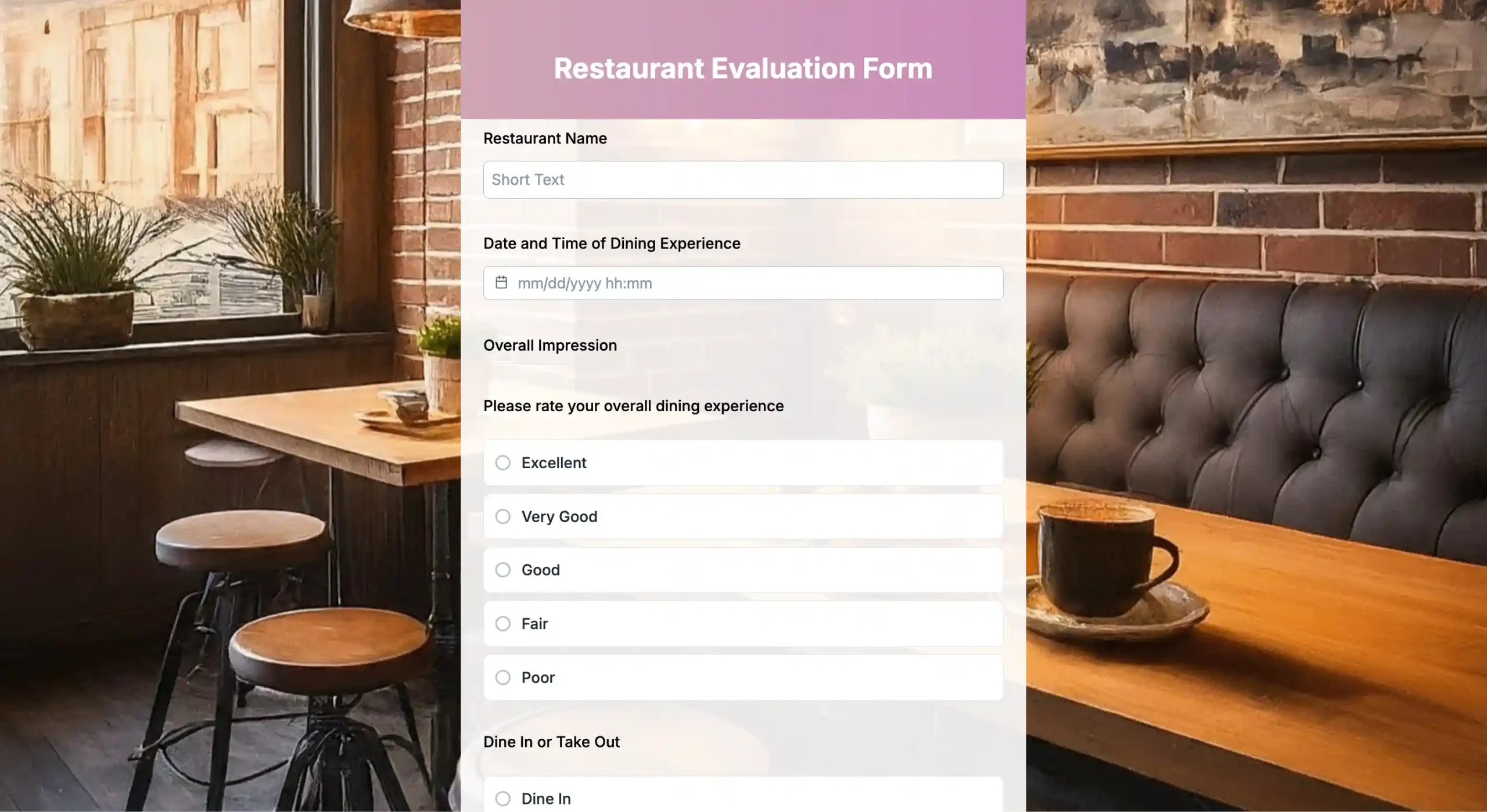

Customer evaluations

Customer evaluations measure satisfaction with a company’s products or services. They usually include questions about service quality, ease of use, and likelihood of repeat business. Customer evaluations spot weak points before they affect loyalty.

Related templates:

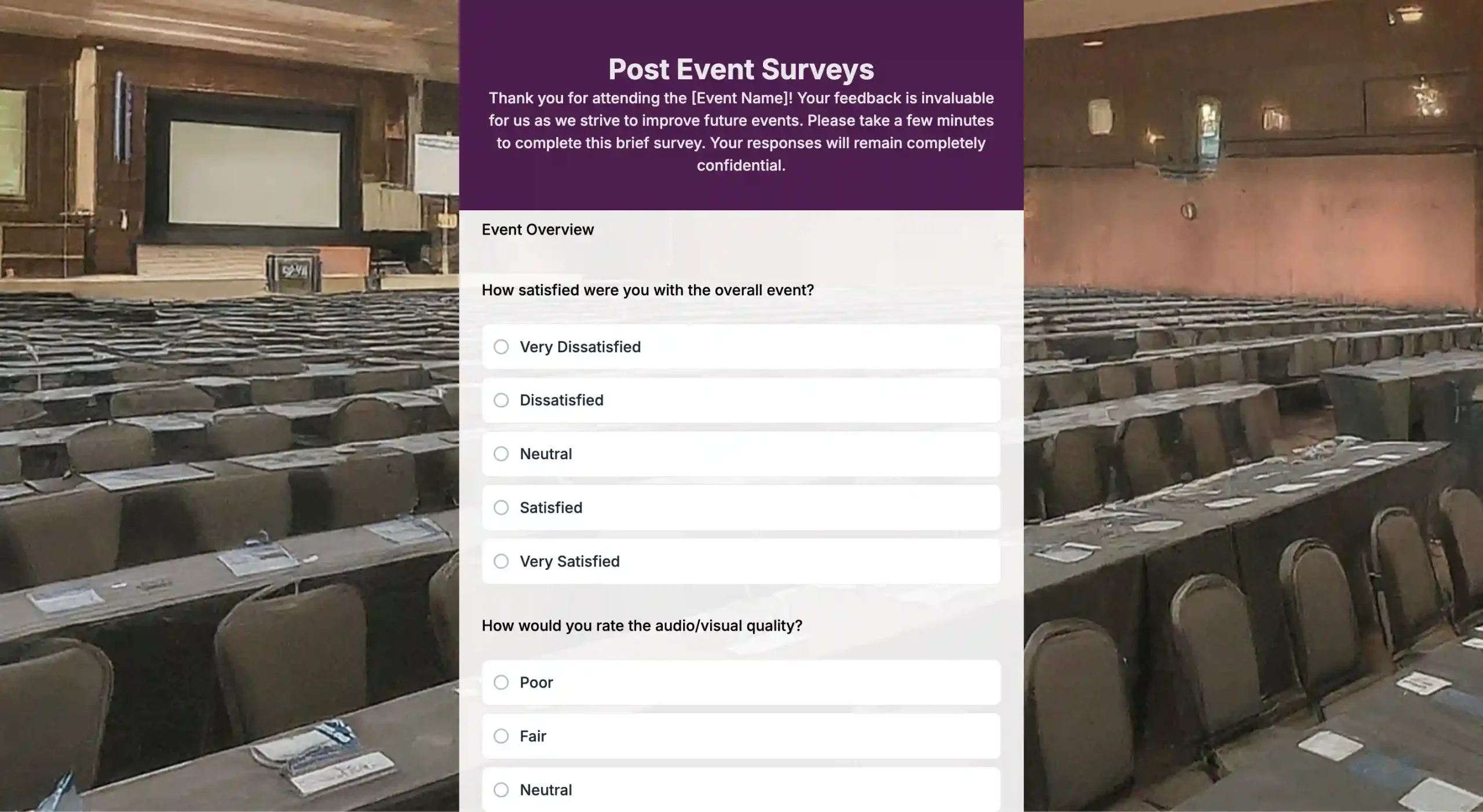

Event evaluations

Events, whether professional conferences or casual community gatherings, benefit from structured feedback. Event evaluation forms track attendee satisfaction with logistics, content, speakers, and overall value. They give organizers data to refine future events and improve turnout.

Related templates:

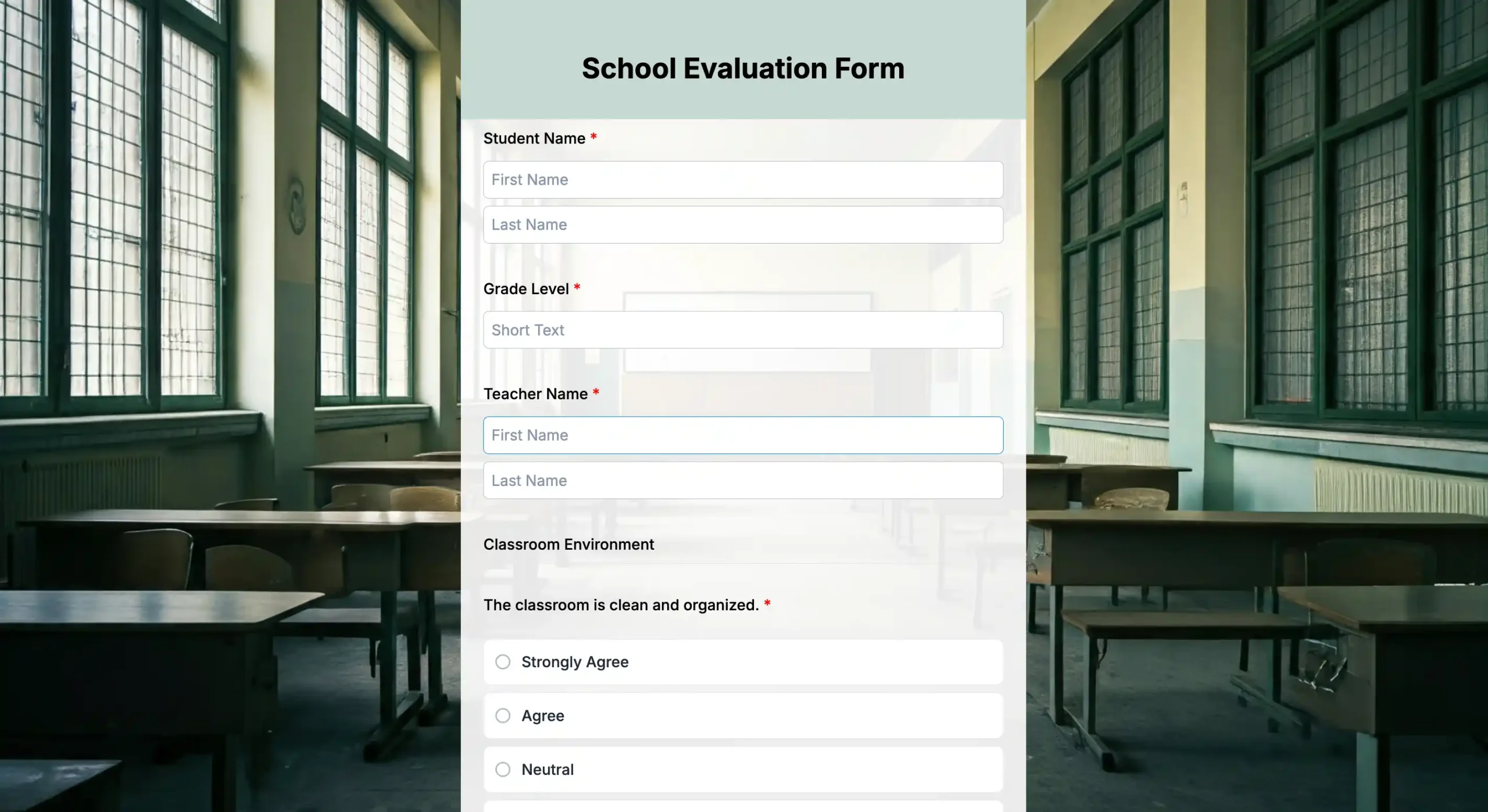

Peer-based evaluations

Peer evaluations are often used in both academic and workplace settings. They allow colleagues or classmates to give feedback on teamwork, accountability, and contribution. This type of evaluation balances the view of supervisors with the lived experience of collaborators, offering a fuller perspective.

Related template:

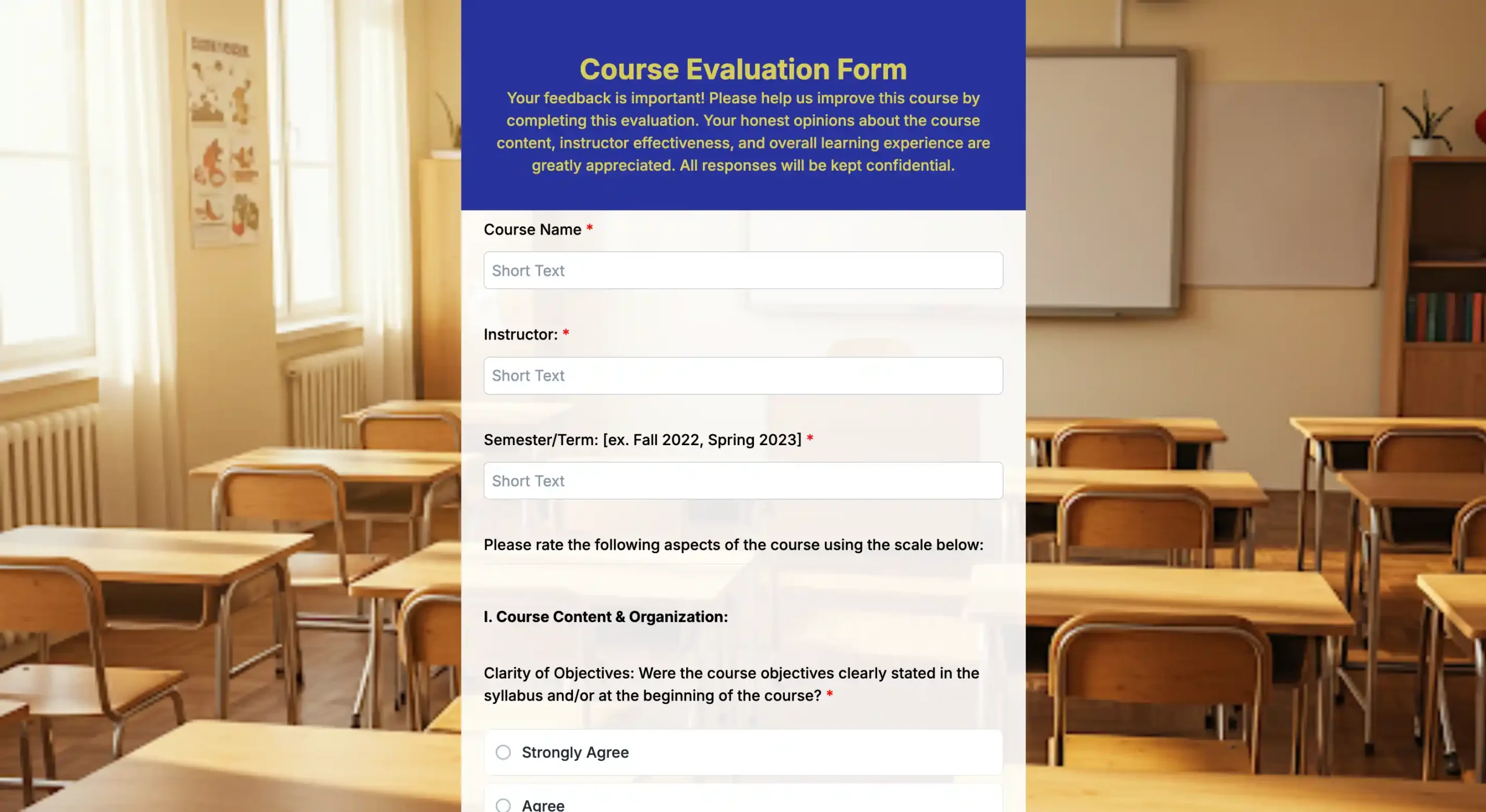

Training and course evaluations

Training and courses only work if they meet learning goals. These forms check if the material was useful, the pace was right, and the trainer or teacher was effective. They help schools and organizations know what to improve and whether learners are ready to move forward.

Related templates:

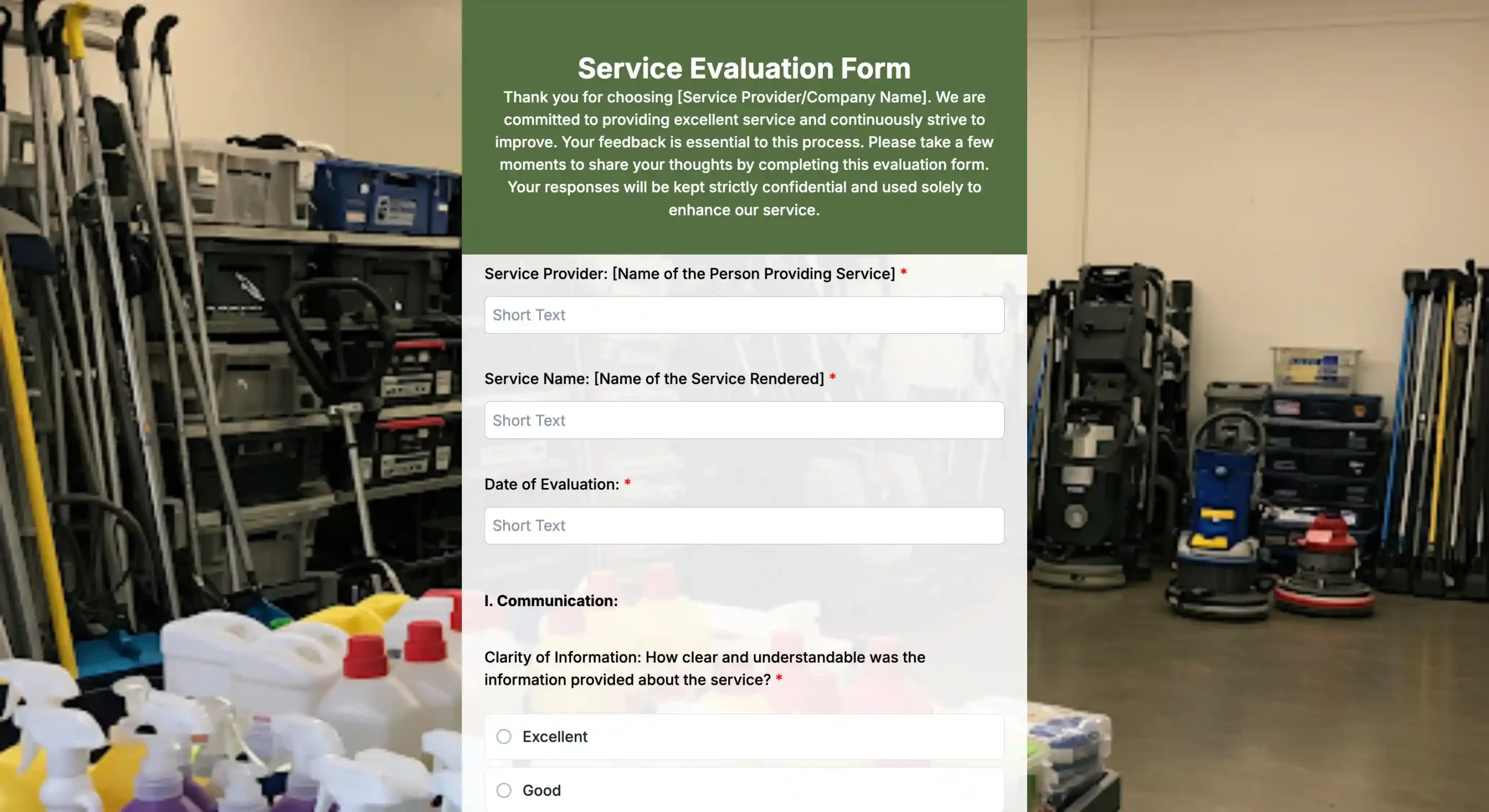

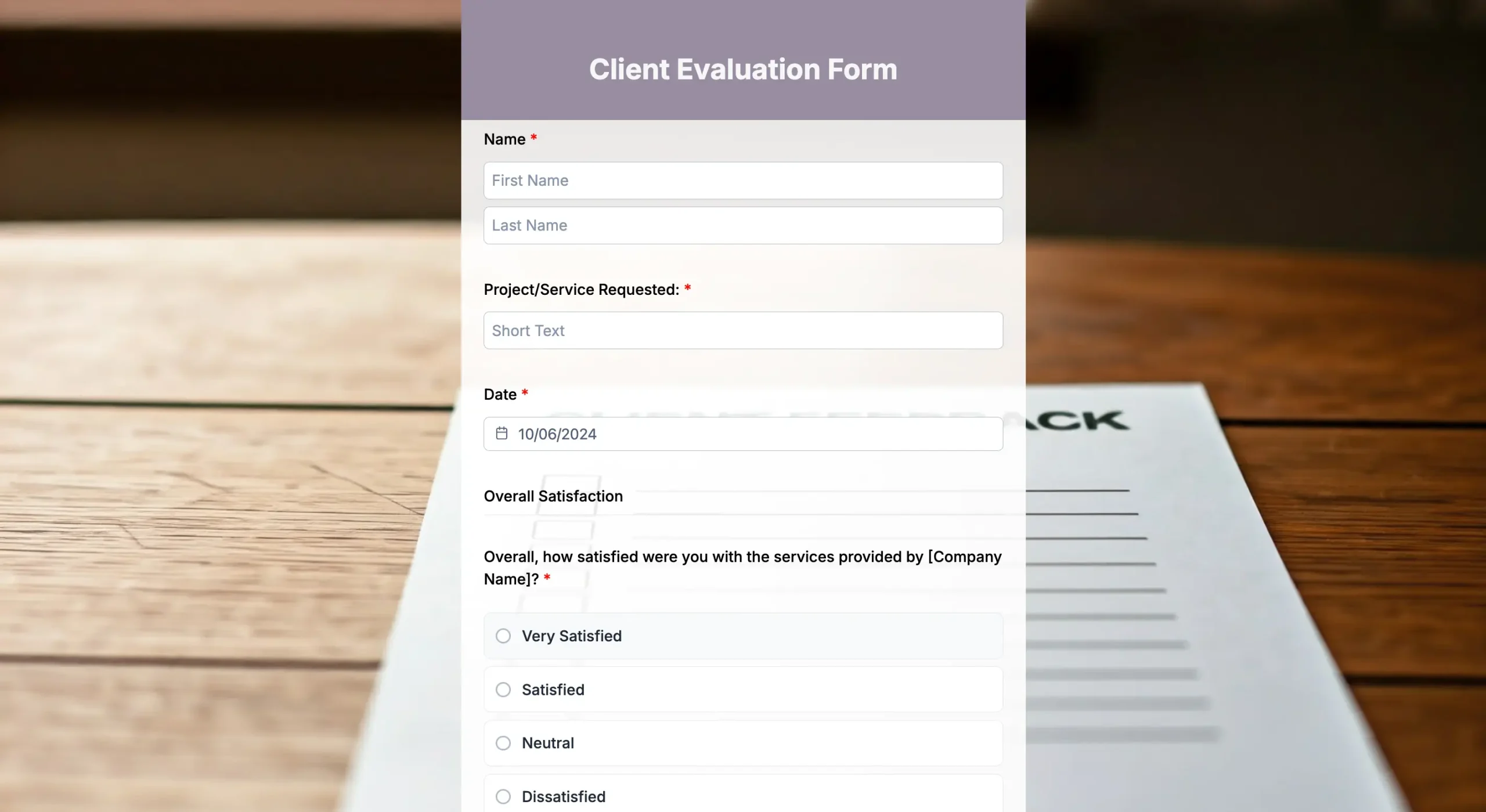

Client evaluations

Client evaluations are separate from general customer forms and are often used in professional services like consulting, law, marketing, or customer service. These forms track satisfaction with deliverables, communication, and professionalism. They help businesses strengthen long-term client relationships by showing they value client input.

Related template:

Performance Improvement Plans (PIP)

These forms are used when an employee’s performance requires structured intervention. Unlike general evaluations, PIP forms set specific goals, timelines, and progress metrics. They don’t just evaluate; they create an improvement roadmap.

Self-assessments

Self-evaluations invite individuals to reflect on their performance, strengths, and areas for growth. These forms are often paired with employee or peer reviews to encourage accountability and self-awareness. When done honestly, they help align personal and organizational goals.

Effectiveness evaluations

Did that new project or initiative actually work? This evaluation is less personal and more of a big-picture assessment of whether our efforts deliver the expected results and value for the time and money we invested. It’s how we make wise decisions for the future.

50 sample evaluation questions for different criteria

Employee evaluations

- Did the employee meet assigned goals?

- How well did they manage deadlines?

- Did they show initiative in problem-solving?

- Were communication skills clear and professional?

- Did they adapt well to changes?

- How consistent was their work quality?

- Did they contribute to team success?

- How well did they manage time and resources?

Customer evaluations

- How satisfied are you with the service/product?

- Was the process easy and convenient?

- How would you rate the quality overall?

- Did staff handle your needs professionally?

- How likely are you to return?

- How likely are you to recommend us to others?

- Was the pricing fair for the value received?

- Did you encounter any issues during your experience?

- How quickly were your concerns addressed?

Event evaluations

- Was event registration easy to complete?

- How satisfied were you with the event organization?

- Were the sessions or activities valuable?

- How would you rate the speakers or facilitators?

- Was the venue comfortable and accessible?

- Would you attend another event with us?

- How relevant was the content to your needs?

- What part of the event could be improved?

Training and course evaluations

- Were the learning objectives clear?

- Was the trainer/teacher knowledgeable?

- Was the pace of the course appropriate?

- Were the materials and resources useful?

- Did the training meet your expectations?

- Did the course improve your skills or knowledge?

- Was there enough opportunity for questions or discussion?

- Would you recommend this training to others?

Performance Improvement Plans (PIP)

- Did the employee understand the improvement goals?

- Were the goals realistic and achievable?

- Did the employee make progress toward the set goals?

- Was the effort consistent during the plan period?

- Did management provide enough support?

- Were deadlines for progress met?

- Did the employee apply feedback effectively?

- Did performance improve compared to before?

- Is the employee ready to exit the PIP?

Self-assessments

- Did you meet your personal goals this period?

- What do you consider your biggest strength?

- What areas do you feel need improvement?

- Did you face challenges that limited your performance?

- How did you handle those challenges?

- How did you contribute to team success?

- What are your goals for the next period?

- What support do you need from your manager?

Practical tips for creating assessment forms

- Keep your forms short and clear.

Use plain language and a structure that is easy to follow, even for first-time respondents. Beyond that, there are deeper, less obvious strategies that can transform how well your online form works.

- Rotate or rephrase questions.

If you repeatedly use the same form, people may start answering automatically or develop survey fatigue. To avoid this, alternate or rephrase the questions and the choice fields.

- Add small feedback sections during the process.

Embed micro-feedback points instead of saving everything for the end. This helps you get fresher, more specific responses; waiting until the end often results in vague answers.

- Ask for feedback right after the experience.

People give better feedback when the experience is fresh. Handing out course evaluations right after a class or asking for restaurant feedback before customers leave increases response accuracy.

- Match scales to the question’s context.

Customize evaluation scales to suit the context. For instance, a descriptive rating scale works well for employee evaluations, as it provides structured feedback. A Likert scale is more likely to yield consistent and comparable data for customer surveys.

- Use branching questions.

Conditional questions make evaluations smarter and less burdensome. A follow-up appears only when an earlier answer calls for it, so you get deeper insights without overwhelming everyone with unnecessary details.

- Share results and actions taken.

Closing the loop is a step most organizations miss, yet it helps your cause or brand gain people’s trust. Participants are far more likely to trust and fill out your form if they know their feedback leads to action.
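For the branching tip in particular, the logic is simple enough to sketch in a few lines. The example below is only an illustration, assuming a 1 to 5 satisfaction rating and a made-up follow-up question; the `next_questions` function and its `low_threshold` parameter are hypothetical, and most form builders let you set up the same rule without code.

```python
def next_questions(rating, low_threshold=2):
    """Return the follow-up questions to show after a 1-5 satisfaction rating."""
    if not 1 <= rating <= 5:
        raise ValueError("rating must be between 1 and 5")
    if rating <= low_threshold:
        # Low scores trigger one extra question asking what went wrong.
        return ["What went wrong during your experience?"]
    # Everyone else skips the follow-up and moves on.
    return []


for rating in (1, 4):
    follow_ups = next_questions(rating)
    print(f"Rating {rating}: {len(follow_ups)} follow-up question(s)")
```

Only respondents who gave a low rating see the extra question, which keeps the form short for everyone else.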

Deliver better evaluations with well-designed forms

Evaluation forms may seem simple, but their impact is vast. They sharpen decisions, guide growth, and reveal insights that casual feedback cannot capture. Yet without a well-structured form, honest responses may drop, leading to poorly informed decisions.

But that doesn’t have to happen; use the guide and tips we shared above to revamp your form or create better assessment forms. If you want evaluations that people take seriously and that deliver meaningful data, start with the form. Create your forms with TIGER FORM now!

FAQs

How long should an evaluation be?

An assessment form should be short enough to keep people engaged but detailed enough to provide valuable insights. A good balance is around 5 to 15 questions, taking no more than 10 minutes to complete. A mix of rating scales, multiple-choice questions, and a few open-ended questions works best.

How do you measure the results from an evaluation?

Start by looking at averages from rating scales to spot trends. Compare scores over time to see progress or decline. Organize feedback by categories such as productivity, communication, or service quality, then review open-ended answers for details that numbers can miss. The goal is to turn results into clear action points.
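If your responses are exported as rows, the averaging and comparison described above can be sketched like this. It is a rough illustration under stated assumptions: the periods, categories, and ratings are invented sample data, and `average_by_category` and `change_between` are hypothetical helper names, not features of any particular tool.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical exported responses: (period, category, rating on a 1-5 scale).
responses = [
    ("Q1", "communication", 3),
    ("Q1", "communication", 4),
    ("Q1", "productivity", 4),
    ("Q2", "communication", 4),
    ("Q2", "communication", 5),
    ("Q2", "productivity", 3),
]


def average_by_category(rows):
    """Group ratings by period and category, then average each group."""
    grouped = defaultdict(lambda: defaultdict(list))
    for period, category, rating in rows:
        grouped[period][category].append(rating)
    return {
        period: {cat: mean(vals) for cat, vals in cats.items()}
        for period, cats in grouped.items()
    }


def change_between(averages, earlier, later):
    """Return the change in average rating per category between two periods."""
    return {
        cat: averages[later][cat] - averages[earlier][cat]
        for cat in averages[earlier]
        if cat in averages[later]
    }


averages = average_by_category(responses)
for category, delta in change_between(averages, "Q1", "Q2").items():
    trend = "improved" if delta > 0 else "declined" if delta < 0 else "unchanged"
    print(f"{category}: {trend} by {abs(delta):.1f}")
```

The numbers only show where scores moved; the open-ended answers in the same categories are still needed to explain why.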

What are common mistakes when creating assessment forms?

The biggest mistakes are making the form too long, asking vague or leading questions, and failing to align questions with your goals. Overlooking anonymity when it matters and collecting feedback without acting on it are also common problems.